In CDISC SDTM, values for non-standard variables (i.e. sponsor-defined variables) need to be submitted in separate data sets, the so-called "supplemental qualifier" or "SUPPQUAL" data sets.

So for example, if you have a variable "ENDPOINT" in your lab data, you do not submit these in your LB data set, you submit them in a SUPPLB data set.

The reason for this is an historical one: in the past, there was no way to "mark" non-standard variables in the data sets, as these needed to be provided in SAS Transport 5 format. You could of course mark them as such in the define.xml file, but as the define.xml format and the SAS Transport 5 format are pretty incompable, tools like the SASViewer could not elaborate this meta-information. So you could indeed put values for non-standard variables in the parent data set, but the viewer would not e.g. color them differently, unless of course you wrote a special viewer. No one however wanted to invest in such a venture, for understandable reasons.

Everything changed when the Dataset-XML format was published. It replaces SAS Transport 5 and is based on as well CDISC ODM, as define.xml, and perfectly aligns with the latter. So you can now just put the values of non-standard variables (like the "ENDPOINT" variable in our case) in the normal data set, and just tell that this is a non-standard variable in the define.xml. As Dataset-XML and define.xml perfectly work together, tools extract the metadata from the define.xml and apply this to the variable values when loading the values themselves from the Dataset-XML files.

This allows to e.g. do data validation during loading, like checking whether the to-be-loaded value is of the right data type, whether it obeys to its associated codelist, and so one (I could write several pages about this ...).

For non-standard variables, if you add 'Role="SUPPLEMENTAL QUALIFIER"' to your non-standard variable's ItemRef, then the system knows that this is a non-standard variable and can e.g. color it differently in the viewer. That is how we implemented it in the "Smart Dataset-XML Viewer".

Now, keeping the non-standard variable in the parent data set instead of "banning them" to a SUPPQUAL data set is not yet allowed by SDTM. This might change once the FDA starts accepting Dataset-XML as an exchange format.

Currently, the FDA is piloting the use of Dataset-XML, but still wanted the participants of the pilot to submit their data in almost exactly the same way as when using SAS Transport 5, i.e. with non-standard variables in SUPPQUAL datasets, which I think is a lost opportunity.

So I was looking into a way to bring non-standard variables back to their parent data set during loading in the "Smart Dataset-XML Viewer". When you have a SUPPLB however with 65,000 records, this would not be easy (i.e. it would be time consuming), as for each SUPPLB record, you need to find the parent record in the parent LB data set, which itself also has over 60,000 records. So I started thinking about a "smart" solution.

This weekend I found it. I observed that in most SDTM datasets, the records are more or less ordered by USUBJID. So if you first do a quick scan over all the records of the LB data set, and for each unique USUBJID keep the first and last occurrence row number, and keep this information in an "hashmap", you later only need to search for the parent record within this limited range.

To my surprise, generating the hashmap only took 25 milliseconds, although the 60,000 records need to be scanned. Merging the non-standard variable values back into their parent data set then became very efficient, a speed increase by a factor of over 20 was observed. As such, it took less than 2.5 minutes to merge the 65,000 SUPPLB records back into the 60,000 LB records. Here is a screenshot:

The variables "ENDPOINT" and "LBTMSHI" are the non-standard ones, and although they were loaded from the SUPPLB data set, they nicely show up in the LB table (I moved the columns to the left for better visibility).

The "trick" that made this possible was essentially creating sort of an index on USUBJID.

So I wonder whether the reviewers at the FDA create indexes on their (SAS?) tables in their review systems to be able to easily combine information from different tables. Do they?

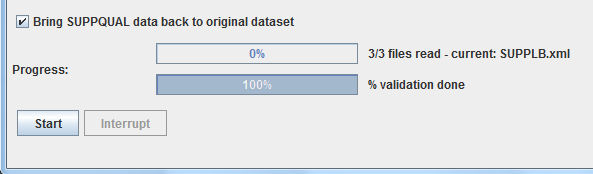

P.S. I will release this new version of the "Smart Dataset-XML Viewer" on Sourceforge in the next few days. In that release, "bringing back SUPPQUAL data to their original data set" will be an optional feature (it still takes extra time). So you will have a checkbox for switching the feature on or off (default is off):

It would be great if someone would then try out this new feature on data sets that have millions of records, and then report back to me!

I just uploaded the new version of the viewer to:

ReplyDeletehttp://sourceforge.net/projects/smart-sds-xml-viewer/files/