Last week, I attended a LOINC conference in Annecy, France, at a beautiful location on the shore of Lake Annecy, made available by bioMérieux, a globally operating "in vitro diagnostics" (IVD) company with over 13,000 employees.

On this occasion, I gave a presentation titled "The use of LOINC and UCUM in clinical research. 3 years after Barcelona, where are we now?"

The conference was rather different from the CDISC conferences I often attend ...

The first day (which I did not attend) was an "education day". There was only one stream, and it was completely free of charge for people who also attended the conference. Well, one can say it was part of the conference.

The next three days were allocated to presentations and discussions, including an open "LOINC committee meeting", which any conference participant could attend. In the committee meeting, the roadmap for the coming years is discussed, and the accomplishments of the different sub-committees are presented and discussed. This makes LOINC as an organization (under the umbrella of Regenstrief) much more transparent than, for example, CDISC.

What was interesting for me was that the LOINC community is much more self-critical than the CDISC community. My impression of CDISC conferences is all too often that about half of the participants attend to hear what the FDA wants from them, and to learn how to satisfy the FDA in the best way possible. They are not critical at all regarding what is happening within CDISC or about the roadmap for the future.

The LOINC community, however, is much more agile and self-critical: there is a lot of discussion about how the standards can be improved, applied, and especially promoted.

Also, there is much more willingness to cooperate with other organizations, and even to drop certain parts of one's own standard when another organization has a better solution. This was nicely demonstrated by the new LOINC-SNOMED agreement, which will lead to the development of mappings of individual LOINC concepts to SNOMED terms, especially for the "LOINC parts". Just as an example of what this means: for LOINC code 1751-7 "Albumin [Mass/volume] in Serum/Plasma", the LOINC "Component" part is "Albumin", which has the SNOMED-CT code 52454007.

This doesn't, however, mean that the LOINC test codes will be replaced by SNOMED-CT codes. On the contrary: the agreement is clearly under the banner "LOINC for tests, SNOMED for results and concepts". Having all the LOINC part concepts available as SNOMED terms will enormously improve semantic interoperability, and allow for applications that automate the creation and application of medical knowledge.
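To make the albumin example a bit more concrete, here is a minimal Python sketch of what such a part-level mapping could look like. It is only an illustration: the class `LoincTerm`, the table `PART_TO_SNOMED` and the function `snomed_for_component` are names I made up, and the one-entry table is filled by hand. The real mappings will of course come from the LOINC and SNOMED organizations themselves, not from a dictionary like this.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LoincTerm:
    """A LOINC test code together with some of its 'parts' (axes)."""
    code: str
    name: str
    component: str   # what is measured
    prop: str        # kind of property, e.g. mass concentration
    time: str        # timing, e.g. point in time
    system: str      # specimen / system
    scale: str       # e.g. quantitative


# The example from the text: LOINC 1751-7
ALBUMIN_SER_PLAS = LoincTerm(
    code="1751-7",
    name="Albumin [Mass/volume] in Serum/Plasma",
    component="Albumin",
    prop="MCnc",
    time="Pt",
    system="Ser/Plas",
    scale="Qn",
)

# Hypothetical, hand-filled lookup: LOINC part name -> SNOMED-CT concept id.
# Only the single pair mentioned in the text is included.
PART_TO_SNOMED = {"Albumin": "52454007"}


def snomed_for_component(term: LoincTerm) -> Optional[str]:
    """Return the SNOMED-CT id for the term's Component part, if we know one."""
    return PART_TO_SNOMED.get(term.component)


print(ALBUMIN_SER_PLAS.code, "->", snomed_for_component(ALBUMIN_SER_PLAS))
```

Note that the LOINC code itself stays the identifier of the test; the SNOMED id only describes one of its parts, which is exactly the "LOINC for tests, SNOMED for results and concepts" division.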

Ideally, CDISC should also have been part of this agreement, but as we all know, LOINC and certainly SNOMED-CT are still too much "not invented here" at CDISC, which still strongly hinders interoperability between research and healthcare.

From the presentations at the conference, we also see that LOINC and UCUM (a notation for units, far superior to the CDISC "unit list") are not only becoming more popular, but are also increasingly mandated by law in numerous countries (such as my home country Austria). As one US colleague revealed, a very large healthcare insurance company ("payer") in the US may fine labs that do not provide lab results with UCUM-compliant units. Should CDISC also be fined for refusing to use UCUM?

There were also some interesting presentations about SHIELD, the FDA program for "Standardization of Lab Data to Enhance Patient-Centered Outcomes Research and Value-Based Care", which is, among other things, also meant to make lab data more usable in research. In this project, the FDA works together with Regenstrief/LOINC, the Centers for Disease Control and Prevention (CDC), the National Institutes of Health (NIH), the Office of the National Coordinator for Health Information Technology (ONC), the Centers for Medicare and Medicaid Services (CMS), the US Department of Veterans Affairs (VA), IVD manufacturers, EHR vendors, laboratories, the College of American Pathologists, and others.

Prominently absent from the list is ... CDISC. Maybe this is because SHIELD recommends a number of standards that are all "not invented here" at CDISC?

CDISC is using LOINC, but only for classic lab tests (which excludes microbiology tests), and only because the FDA mandates it. Especially for COVID-19 studies, my personal opinion is that the LOINC code of the microbiology test should be submitted, i.e. for this kind of study, it should be mandated that MBLOINC is populated. I don't think CDISC will agree.

But LOINC is much more than just lab tests. It has over 800 codes for vital signs, and a large number of codes for ECG tests, questionnaires and their questions, microscopy, radiology, etc.

My own impression (personal opinion!) is that CDISC (at least the SDTM team) actively tries to keep LOINC "outside the door": there are so many places in the SDTMIGs that state "the following qualifiers would generally not be used for this domain: ..., --LOINC, ..." where it makes complete sense to have the LOINC code. As it is pre-coordinated (i.e. "planned in advance"), the LOINC code is a much better and more unique designator of the test than the combination of the post-coordinated ("categorized afterwards") CDISC variables and their controlled terminology (CT).

Another sign of this is that in the CDISC "Therapeutic Area User Guide for COVID-19", "LOINC" is only mentioned for classic lab tests (LBLOINC), but not at all for virus-related (microbiology) tests (MBLOINC), although it would be enormously advantageous to have the LOINC code there.

So, for me, the question arises: "Is CDISC afraid that the FDA might get the idea of mandating the use of MBLOINC for COVID-19-related tests?"

Back to the conference...

There were many interesting presentations, including one from a representative of NIH/NLM with a slide about the use of LOINC outside healthcare, which also stated that all major research databases in the US use LOINC to combine data from many sources (and not only for classic lab tests). Prominently absent, again, is CDISC.

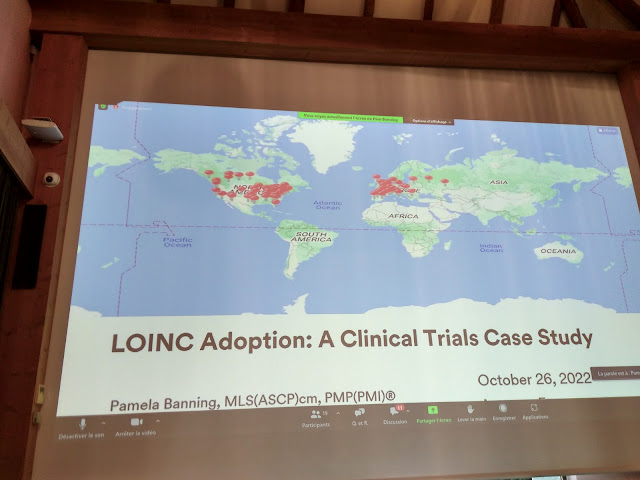

A very interesting presentation was the one from Pam Banning of 3M, titled "LOINC Adoption: A Clinical Trials Case Study". Her team was hired by a sponsor company to make all the labs involved in its clinical research projects "LOINC ready". The sponsor made this decision based on the 2021 FDA Guidance. The project involved no fewer than 144 sites (!) spread over North America and Europe, where lab tests were reported in different languages (English, Spanish, Portuguese, ...).

The effort of mapping from local codes/designations was manageable, as only a relatively small set of lab tests needed to be coded to LOINC. More difficult were the information exchange and the communication with and between all the sites.

Although CDISC doesn't really encourage LOINC usage, it is also our experience that sponsors are currently picking up LOINC rapidly, and are also starting to use it beyond the use case of FDA submissions. For example, we observe that more and more sponsors are starting to use LOINC and to require it from their sites and labs in order to strongly improve data quality, as it allows data quality assurance to be performed on lab results. The use of UCUM (although not used within CDISC) is also growing rapidly at sponsors, not least because the combination of LOINC and UCUM allows automated conversion from "US Conventional" to "SI" units, which is not possible with CDISC units.

I will not explain this in detail here, as this topic in itself provides sufficient material for a separate blog entry, and it is already partially covered by an earlier blog entry.
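Just to give a flavor of the idea behind automated "US Conventional" to "SI" conversion, here is a very small Python sketch. It rests on my own simplifying assumptions: the LOINC code tells us which analyte was measured, so a molar mass can be looked up, and the UCUM units (`mg/dL`, `mmol/L`) are machine-readable. The table `MOLAR_MASS_G_PER_MOL` and the function `mg_per_dl_to_mmol_per_l` are hypothetical names, and the table contains only one hand-entered value. A real implementation would parse arbitrary UCUM expressions and use a curated analyte table rather than a hard-coded one.

```python
# Hypothetical, hand-filled table: LOINC code -> molar mass of the analyte (g/mol).
# 2345-7 is "Glucose [Mass/volume] in Serum or Plasma"; glucose is about 180.156 g/mol.
MOLAR_MASS_G_PER_MOL = {
    "2345-7": 180.156,
}


def mg_per_dl_to_mmol_per_l(loinc_code: str, value_mg_per_dl: float) -> float:
    """Convert a mass concentration in mg/dL to a molar concentration in mmol/L.

    The analyte (and so its molar mass) is identified by the LOINC code.
    Raises KeyError when no molar mass is known for the code.
    """
    molar_mass = MOLAR_MASS_G_PER_MOL[loinc_code]   # g/mol, numerically equal to mg/mmol
    value_mg_per_l = value_mg_per_dl * 10.0         # 1 L = 10 dL
    return value_mg_per_l / molar_mass              # (mg/L) / (mg/mmol) = mmol/L


glucose_si = mg_per_dl_to_mmol_per_l("2345-7", 90.0)
print(f"90 mg/dL glucose is about {glucose_si:.2f} mmol/L")
```

So a glucose result of 90 mg/dL comes out at roughly 5 mmol/L. Without a code that identifies the analyte, the molar mass cannot be looked up, and this kind of conversion cannot be automated.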

Unfortunately, I couldn't attend the last day of the conference, but the LOINC organization will make recordings of all presentations available to the participants in the coming weeks, so I may extend this blog entry or add some further comments.

Your comments are of course also very welcome!